Speculators is a unified library for building, training, and storing speculative decoding algorithms for large language model (LLM) inference, including in frameworks like vLLM. Speculative decoding is a lossless technique that speeds up LLM inference by using a smaller, faster draft model (i.e., "the speculator") to propose tokens, which the larger base model then verifies. The speculator drafts multiple tokens ahead, and the base model verifies all of them in a single forward pass, which reduces latency. Because every accepted token is guaranteed to match what the base model would have generated on its own, the speedup comes without sacrificing output quality.
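The draft-then-verify loop described above can be illustrated with a minimal, self-contained sketch of the greedy (argmax) variant of speculative decoding. The two "models" are toy functions standing in for real networks. The function names are hypothetical, and this is not how Speculators or vLLM implement it. With sampling, verification instead uses a rejection-sampling rule that preserves the base model's output distribution.

```python
from collections.abc import Callable

# A "model" here is any function mapping a token prefix to its greedy
# (argmax) next token. These toy callables stand in for real networks.
NextToken = Callable[[list[int]], int]


def speculative_step(
    prefix: list[int], draft: NextToken, target: NextToken, k: int
) -> list[int]:
    """One draft-then-verify round; returns the tokens accepted this round."""
    # 1. The draft model proposes k tokens autoregressively.
    proposal: list[int] = []
    for _ in range(k):
        proposal.append(draft(prefix + proposal))

    # 2. The target model checks every drafted position. A real engine gets
    #    all k + 1 predictions from ONE batched forward pass; we loop for clarity.
    accepted: list[int] = []
    for i in range(k):
        expected = target(prefix + proposal[:i])
        if proposal[i] != expected:
            # First disagreement: keep the target's own token and end the round.
            accepted.append(expected)
            return accepted
        accepted.append(proposal[i])

    # 3. Every draft matched, so the target's next prediction is a free bonus token.
    accepted.append(target(prefix + proposal))
    return accepted


def speculative_generate(
    prefix: list[int], draft: NextToken, target: NextToken, k: int, max_new_tokens: int
) -> tuple[list[int], int]:
    """Generate tokens and also return how many verification rounds were needed."""
    out = list(prefix)
    rounds = 0
    while len(out) - len(prefix) < max_new_tokens:
        out.extend(speculative_step(out, draft, target, k))
        rounds += 1
    return out[: len(prefix) + max_new_tokens], rounds


def greedy_generate(prefix: list[int], target: NextToken, max_new_tokens: int) -> list[int]:
    """Baseline: one target call per generated token."""
    out = list(prefix)
    for _ in range(max_new_tokens):
        out.append(target(out))
    return out


# Toy models: the draft agrees with the target except at every 4th position.
def target_model(tokens: list[int]) -> int:
    return (3 * sum(tokens) + len(tokens)) % 10


def draft_model(tokens: list[int]) -> int:
    guess = target_model(tokens)
    return (guess + 1) % 10 if len(tokens) % 4 == 0 else guess


if __name__ == "__main__":
    spec, rounds = speculative_generate([1, 2, 3], draft_model, target_model, k=3, max_new_tokens=12)
    assert spec == greedy_generate([1, 2, 3], target_model, 12)  # identical output
    print(f"{len(spec) - 3} tokens in {rounds} verification rounds")
```

Because each round keeps only tokens the target itself would have chosen, the output is identical to plain greedy decoding. The saving comes from needing fewer rounds (target forward passes) than generated tokens.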

Speculators standardizes this process by providing a productionized end-to-end framework to train draft models with reusable formats and tools. Trained models can seamlessly run in vLLM, enabling the deployment of speculative decoding in production-grade inference servers.

💬 Join us on the vLLM Community Slack and share your questions, thoughts, or ideas in:

- #speculators
- #feat-spec-decode

- Offline Training Data Generation using vLLM: Enables the generation of hidden states using vLLM. Data samples are saved to disk and can be used for draft model training.

- Draft Model Training Support: End-to-end training support for single-layer and multi-layer draft models. Training is supported for both non-MoE and MoE models. Vision-language (VL) training is coming soon.

- Standardized, Extensible Format: Provides a Hugging Face-compatible format for defining speculative models, with tools to convert from external research repositories into a standard speculators format for easy adoption.

- Seamless vLLM Integration: Built for direct deployment into vLLM, enabling low-latency, production-grade inference with minimal overhead.

The following table summarizes the models that have been trained end-to-end by our team, as well as others on the roadmap:

| Verifier Architecture | Verifier Size | Training Support | vLLM Deployment Support |
|---|---|---|---|
| Llama | 8B-Instruct | EAGLE-3 ✅ | ✅ |
| | 70B-Instruct | EAGLE-3 ✅ | ✅ |
| Qwen3 | 8B | EAGLE-3 ✅ | ✅ |
| | 14B | EAGLE-3 ✅ | ✅ |
| | 32B | EAGLE-3 ✅ | ✅ |
| gpt-oss | 20b | EAGLE-3 ✅ | ✅ |
| | 120b | EAGLE-3 ✅ | ✅ |
| Qwen3 MoE | 235B-A22B | EAGLE-3 ✅ | ✅ |
| Qwen3-VL | 235B-A22B | EAGLE-3 ⏳ | ⏳ |
| Mistral 3 Large | 675B-Instruct | EAGLE-3 ⏳ | ⏳ |

✅ = Supported, ⏳ = In Progress, ❌ = Not Yet Supported

End-To-End Training Examples:

Models trained through Speculators can run seamlessly in vLLM using a simple vllm serve <speculator_model> command. This will run the model in vLLM using default arguments, defined in the speculator_config of the model's config.json.

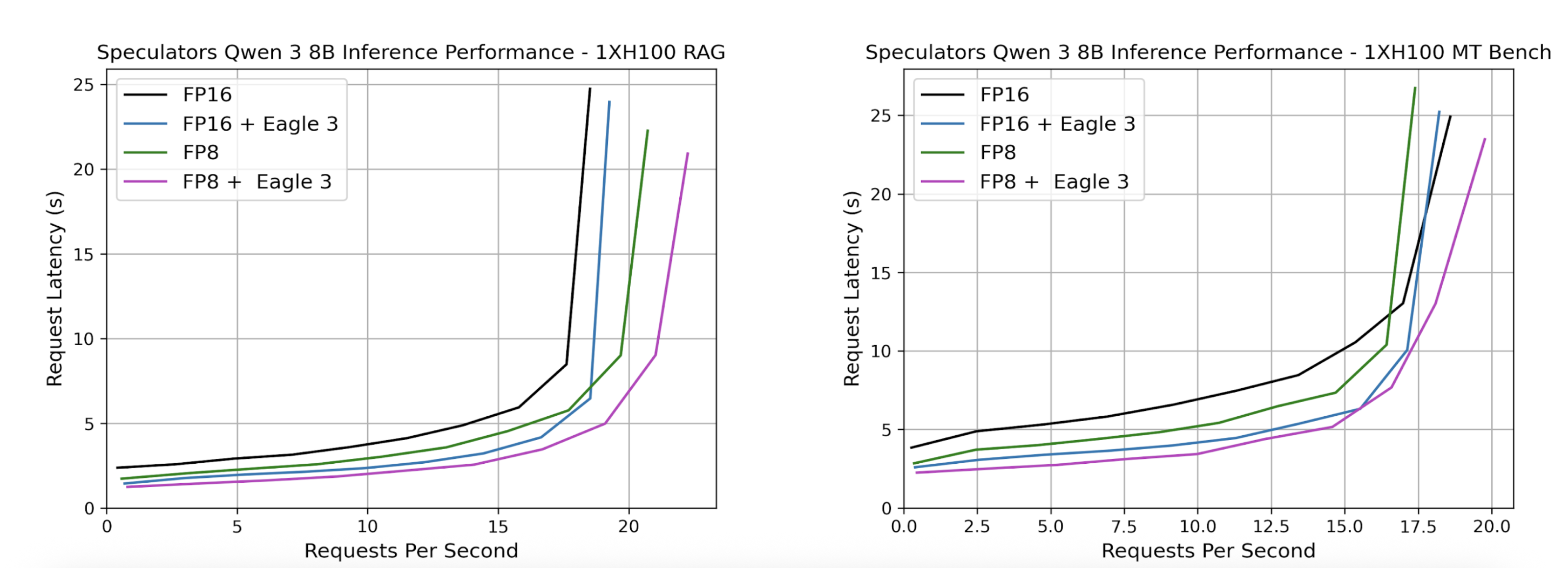

VLLM_USE_V1=1 vllm serve RedHatAI/Qwen3-8B-speculator.eagle3Served models can then be benchmarked using GuideLLM. Below, we show sample benchmark results where we compare our speculator with its dense counterpart. We also additionally compare quantization to explore additional performance improvements by swapping the dense verifier, Qwen/Qwen3-8B with the quantized FP8 model, RedHatAI/Qwen3-8B-FP8-dynamic in the speculator_config.
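The verifier swap described above can be scripted against a local copy of the speculator checkpoint. The sketch below is hypothetical. It assumes the verifier's model ID is stored at `speculator_config` → `verifier` → `name_or_path` in `config.json`. Those nested key names are an assumption, so inspect your model's `config.json` and adjust `CONFIG_KEY` and `VERIFIER_PATH` before using it.

```python
import json
from pathlib import Path

# ASSUMPTION: illustrative key layout only. Check your model's config.json
# and adjust these if the verifier ID is stored under different keys.
CONFIG_KEY = "speculator_config"
VERIFIER_PATH = ("verifier", "name_or_path")


def swap_verifier(config_path: str, new_verifier: str) -> str:
    """Replace the verifier model ID in config.json in place; return the old ID."""
    path = Path(config_path)
    config = json.loads(path.read_text())

    # Walk down to the dict that holds the verifier ID.
    node = config[CONFIG_KEY]
    for key in VERIFIER_PATH[:-1]:
        node = node[key]

    old_verifier = node[VERIFIER_PATH[-1]]
    node[VERIFIER_PATH[-1]] = new_verifier
    # Rewrite the whole file; all other keys are preserved unchanged.
    path.write_text(json.dumps(config, indent=2) + "\n")
    return old_verifier
```

For example, `swap_verifier("Qwen3-8B-speculator.eagle3/config.json", "RedHatAI/Qwen3-8B-FP8-dynamic")` would edit a hypothetical local checkpoint directory, which can then be passed to `vllm serve` as a local model path.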

Before installing, ensure you have the following:

- Operating System: Linux or macOS

- Python: 3.10 or higher

- Package Manager: pip (recommended) or conda
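A quick way to confirm the two platform requirements above from Python is sketched below. It is a hypothetical helper, not part of Speculators, and it checks only the operating system and Python version listed here (`platform.system()` reports macOS as `Darwin`).

```python
import platform
import sys

# Requirements listed in this README.
SUPPORTED_SYSTEMS = {"Linux", "Darwin"}  # platform.system() reports macOS as "Darwin"
MIN_PYTHON = (3, 10)


def check_prerequisites(system: str, python_version: tuple[int, int]) -> list[str]:
    """Return human-readable problems; an empty list means both checks pass."""
    problems: list[str] = []
    if system not in SUPPORTED_SYSTEMS:
        problems.append(f"unsupported operating system: {system} (need Linux or macOS)")
    if python_version < MIN_PYTHON:
        major, minor = python_version
        problems.append(f"Python {major}.{minor} found; need 3.10 or higher")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites(platform.system(), tuple(sys.version_info[:2]))
    print("\n".join(issues) if issues else "OS and Python version look fine")
```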

Install the latest stable release from PyPI:

```bash
pip install speculators
```

For the latest development version or to contribute to the project:

```bash
git clone https://github.com/vllm-project/speculators.git
cd speculators
pip install -e .
```

For development with additional tools:

```bash
pip install -e ".[dev]"
```

To enable the generation of data (i.e., hidden states) from vLLM for speculator training:

```bash
pip install -e ".[datagen]"
```

You can verify your installation by checking the version:

```bash
speculators --version
```

Or by importing the package in Python:

```python
import speculators

print(speculators.__version__)
```

Speculators is licensed under the Apache License 2.0.

If you find Speculators helpful in your research or projects, please consider citing it:

```bibtex
@misc{speculators2025,
  title={Speculators: A Unified Library for Speculative Decoding Algorithms in LLM Serving},
  author={Red Hat},
  year={2025},
  howpublished={\url{https://github.com/vllm-project/speculators}},
}
```